Memory management is a crucial aspect of any operating system, responsible for efficiently handling memory allocation, ensuring process isolation, and optimizing system performance. In Linux, memory management is a highly sophisticated subsystem designed to balance performance, security, and resource utilization.

In this article, we will explore the fundamental concepts of Linux memory management, including virtual memory, paging, memory allocation mechanisms, and optimizations that improve efficiency.

Understanding Memory in Linux

Linux uses a structured memory model that provides efficiency, isolation, and security between user applications and the operating system. At a high level, the virtual address space is divided into two main regions:

- Kernel Space – Reserved for the operating system, including core services, device drivers, and memory management.

- User Space – Where user applications run, isolated from the kernel to prevent unauthorized access or system crashes.

One of the key features of Linux memory management is virtual memory, an abstraction layer over physical memory. Instead of addressing physical RAM directly, each process operates in its own virtual address space. This allows:

- Process Isolation – Each process gets its own memory space, preventing interference with others.

- Efficient Resource Management – The system can allocate memory dynamically and swap unused data to disk if needed.

- Security & Stability – Prevents user processes from corrupting kernel memory, enhancing system reliability.

What Is Virtual Memory?

Virtual memory is a memory management technique that lets processes use more memory than the available physical RAM, with disk storage (swap space) acting as an extension. It also enables efficient multitasking, isolates processes from one another, and improves system stability. Translation from virtual to physical addresses is performed in hardware by the CPU's Memory Management Unit (MMU), using page tables that the Linux kernel builds and maintains for each process.

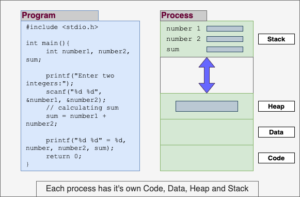

Each process is assigned its own virtual address space, which is independent of other processes and the underlying hardware. This address space is logically divided into different regions based on the purpose of memory allocation:

- Text Segment (Code Section) – Contains the compiled machine code of the program and is typically read-only to prevent accidental modification.

- Data Segment – Stores global and static variables. Initialized ones live in the data section (.data), and uninitialized or zero-initialized ones live in the BSS section (.bss).

- Heap – A dynamically allocated memory region that grows as needed at runtime (e.g., via malloc() in C or new in C++). It grows upward, toward higher addresses.

- Stack – Stores function call frames, local variables, and return addresses. The stack grows downward, toward lower addresses. For the main thread, the kernel extends it automatically on demand, up to the limit set by RLIMIT_STACK (see ulimit -s).

Memory Layout of a Process

Paging and the Memory Management Unit (MMU)

What Is Paging?

Paging is a memory management scheme in which virtual memory is divided into fixed-size blocks called pages (typically 4 KB in size), and physical memory is divided into blocks of the same size called page frames. The OS maintains a page table for each process that maps virtual pages to physical frames.

How Paging Works in Linux

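- When a process accesses memory, the MMU first checks the Translation Lookaside Buffer (TLB), a cache of recent translations. On a TLB miss, the MMU (on most architectures, including x86-64) walks the process's page tables to find the entry for that virtual address.

- If the page is present in physical memory, the MMU translates the virtual address into a physical address and the access proceeds.

- If the page is not present, the CPU raises a page fault and the kernel resolves it. It may read the page back from swap space, read it from the file that backs it, or allocate a fresh zero-filled page. An access to an address the process never mapped results in a SIGSEGV instead.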

Page Table Hierarchy

Modern 64-bit Linux systems use multi-level page tables to manage large memory efficiently:

- Page Global Directory (PGD)

- Page Upper Directory (PUD)

- Page Middle Directory (PMD)

- Page Table Entry (PTE)

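Each level is indexed by a different slice of the virtual-address bits. Each entry points to a table at the next level down, until the final page table entry identifies the physical page. Lower-level tables are only allocated for address ranges that are actually in use, so a sparse address space needs far less page-table memory than a single flat table would. Linux's generic code also defines a P4D level between the PGD and PUD. It is used for five-level paging and folded away on systems with four levels.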

Memory Allocation in Linux

1. User-Space Memory Allocation

User applications request memory dynamically using:

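- malloc() / free() – C standard library functions for allocating and releasing heap memory.

- mmap() – Directly maps files or anonymous memory regions into the process's address space.

- brk() / sbrk() – Used internally to grow the heap.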

2. Kernel-Space Memory Allocation

The kernel itself needs to allocate memory efficiently. It uses:

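- kmalloc() – Similar to malloc(), but for kernel use. It returns physically contiguous memory and suits small and medium-sized allocations.

- vmalloc() – Allocates memory that is contiguous in the kernel's virtual address space but may be scattered across physical pages, which makes large allocations easier to satisfy.

- Slab Allocator – A caching layer that keeps pools of same-sized objects, so frequently used kernel objects can be allocated and freed quickly. kmalloc() itself is built on top of it.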

3. Swap Space

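When RAM runs low, Linux moves inactive pages to swap space on disk, freeing memory for active processes. However, excessive swapping, known as thrashing, severely degrades performance because the system spends more time moving pages between RAM and disk than doing useful work.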

Memory Optimization Techniques in Linux

Efficient memory management is critical for system performance and stability. Linux employs several optimization techniques to minimize memory usage, improve speed, and prevent system crashes. Here are some key strategies:

1. Demand Paging

- Instead of loading an entire program into RAM at startup, Linux loads only the necessary pages when they are accessed.

- This reduces startup time and RAM consumption, ensuring efficient use of memory resources.

- Unused pages remain on disk until needed, reducing the system’s memory footprint.

2. Copy-on-Write (CoW)

- When a process forks, the parent and child initially share the same memory pages to save resources.

- If either process attempts to modify a shared page, the kernel creates a separate copy to maintain isolation.

- CoW makes fork() fast and memory-efficient. This benefits process-heavy workloads such as pre-forking servers and the common fork-then-exec pattern used to launch programs.

3. Huge Pages

- Linux's default page size on x86-64 is 4 KB. The TLB holds a limited number of entries, so workloads with large memory footprints suffer frequent TLB misses, each of which requires a page-table walk.

- Huge Pages (2 MB or 1 GB on x86-64) let a single TLB entry cover far more memory, which decreases TLB misses and improves CPU efficiency.

- Ideal for database workloads, high-performance computing (HPC), and large in-memory applications.

4. Transparent Huge Pages (THP)

- Unlike manually allocated Huge Pages, THP automatically manages large pages, reducing the need for explicit application modifications.

- THP helps optimize memory-intensive workloads without requiring developers to change their code.

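- However, it can introduce latency spikes in some workloads. The kernel may need to compact memory to find a free huge page when a fault occurs, and the khugepaged background thread periodically collapses small pages into huge ones.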

5. Out-of-Memory (OOM) Killer

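- When the system runs out of memory and cannot reclaim enough, Linux invokes the OOM Killer. It terminates one or more processes to free memory and keep the system running.

- The kernel picks a victim by computing a badness score for each process (visible in /proc/<pid>/oom_score). The score is based mainly on the process's memory usage: resident memory, swap entries, and page tables. This usually targets the largest memory consumer, which is not necessarily the least important process.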

- Administrators can adjust OOM behavior per process using oom_score_adj. Higher values make a process a more likely victim, and -1000 exempts it entirely, which protects important processes.

Final Thoughts

Memory management in Linux is a highly optimized and complex subsystem, balancing performance, security, and efficient resource utilization. From virtual memory and paging to advanced optimizations like Copy-on-Write and Huge Pages, Linux provides a robust framework to manage system memory. Understanding these mechanisms helps developers and system administrators optimize applications, troubleshoot memory issues, and enhance overall system performance.